A few weeks ago I had to create a simple X-axis-based parallax ‘engine’ for a project. Just basic horizontal movement, scaled based on an abstract z value. Well, somehow that turned into a full 3D engine. So I am continuing to build on what I had started in order to create my own Flash 3D engine, not because I think I can build a better Papervision, Away3D, or Five3D (because I’m sure I can’t), but rather in order to learn. Since I end up using 3D engines fairly often now, I figure it’s good experience for me to build my own and see how it can work from the ground up.

So the first thing I learned, which really surprised me, is that building a 3D engine is easy. I’ll be the first to admit that math isn’t necessarily my strong suit, so believe me when I say it’s not too difficult. There are only a few tricky equations you need, and there’s plenty of information on the web, since it seems almost everyone has created a 3D engine at one point or another. The tricky part, however, is getting the engine optimized and running smoothly. That’s really what my challenge is.
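For anyone curious what those few equations look like, here is a minimal sketch in Python (not the ActionScript from the engine itself). It shows a rotation about the Y axis followed by a simple perspective projection. The function names, the `focal_length` parameter, and the convention that larger z means farther from the viewer are all assumptions made for illustration.

```python
import math


def rotate_y(point, angle):
    """Rotate an (x, y, z) point about the Y axis by `angle` radians."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return (x * c + z * s, y, -x * s + z * c)


def project(point, focal_length=300.0):
    """Project an (x, y, z) point to 2D with a simple perspective divide.

    Assumes the viewer sits `focal_length` units in front of z = 0 and that
    larger z is farther away, so distant points shrink toward the origin.
    """
    x, y, z = point
    if focal_length + z <= 0:
        raise ValueError("point is at or behind the viewer")
    scale = focal_length / (focal_length + z)
    return (x * scale, y * scale)
```

The same `scale` factor is essentially what a parallax effect uses: a layer at a larger z moves less for the same horizontal shift.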

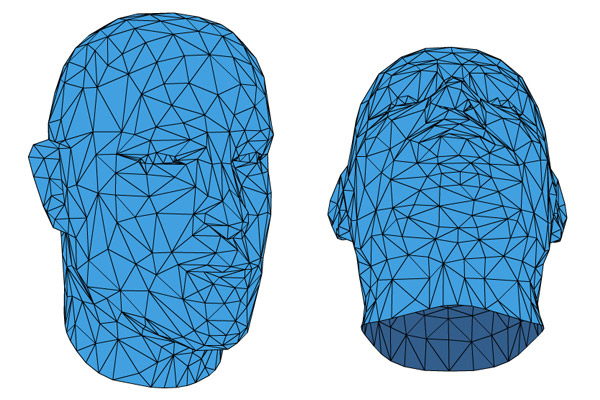

I was able to get basic translation (which runs horrendously slowly right now) as well as rotation implemented. I expanded on that to create a mesh, which can accept data imported from an .obj file (very easy to write a parser for) and create geometry. The mesh, like most in other 3D engines, consists of an array of vertex points and an array of faces, which are themselves collections of references to those vertex points. The mesh as a whole, or any individual face, can accept a material, as well as an optional back material that is used for the reverse side of the normal when single sided is false. I calculate the z-depth for sorting based on the centroid of the polygon, which is probably not the fastest or most accurate way, but so far it seems to work pretty well. I have not yet tackled bitmaps or lighting, but that’s an interesting challenge for down the road.
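To make the mesh description a bit more concrete, here is a rough Python sketch (again, not the engine's ActionScript) of the two pieces mentioned above: a bare-bones .obj parser that yields a vertex list plus faces that index into it, and a back-to-front sort keyed on each face's centroid z. The function names are hypothetical, only `v` and `f` records are read, negative (relative) .obj indices are not handled, and it assumes larger z means farther from the viewer.

```python
def parse_obj(text):
    """Parse the `v` and `f` records of an .obj file into (vertices, faces)."""
    vertices, faces = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            # "v x y z" -> one vertex
            vertices.append(tuple(float(p) for p in parts[1:4]))
        elif parts[0] == "f":
            # "f 7/1/3 ..." -> keep only the vertex index; .obj is 1-based
            faces.append([int(p.split("/")[0]) - 1 for p in parts[1:]])
    return vertices, faces


def centroid_z(face, vertices):
    """Average z of the vertices a face refers to."""
    return sum(vertices[i][2] for i in face) / len(face)


def sort_back_to_front(faces, vertices):
    """Order faces farthest-first so nearer faces are drawn over them."""
    return sorted(faces, key=lambda f: centroid_z(f, vertices), reverse=True)
```

Sorting by centroid is cheap, but it can misorder faces that overlap or intersect in depth, which is one reason it is not the most accurate approach.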

On the note of optimization, I’ve noticed that Papervision, Away3D, and Five3D all use a scene3D and/or view3D. In other words, all of your 3D ends up in a more or less abstract container that handles the rendering. I suspect the reasoning for this is so that a render function only has to be called once, and all the appropriate transforms can happen there. In my experiment, I have decided against this, probably at the expense of performance. The reason I don’t want to include a viewport or scene is so that the user can have more direct access to how the ‘Sprite3D’ is placed on the stage and its relation to other elements. I would also like to see how much can be achieved without having to use a container for the rendering process. Regardless, it’s been a fun learning experience so far.

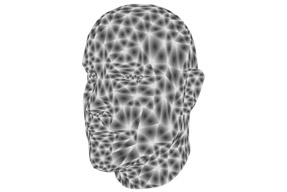

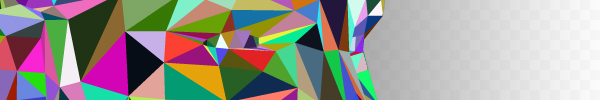

Some demos are below. The first example shows some of the basics. The second shows an experimental gradientFill material (which creates a Voronoi effect), and the third shows an experimental shader that colors the faces according to their position in 3D space.